Scalable RISC-V CPUs for Data Center, Automotive, and Intelligent Edge

Veyron Series High-Performance Compute Processors

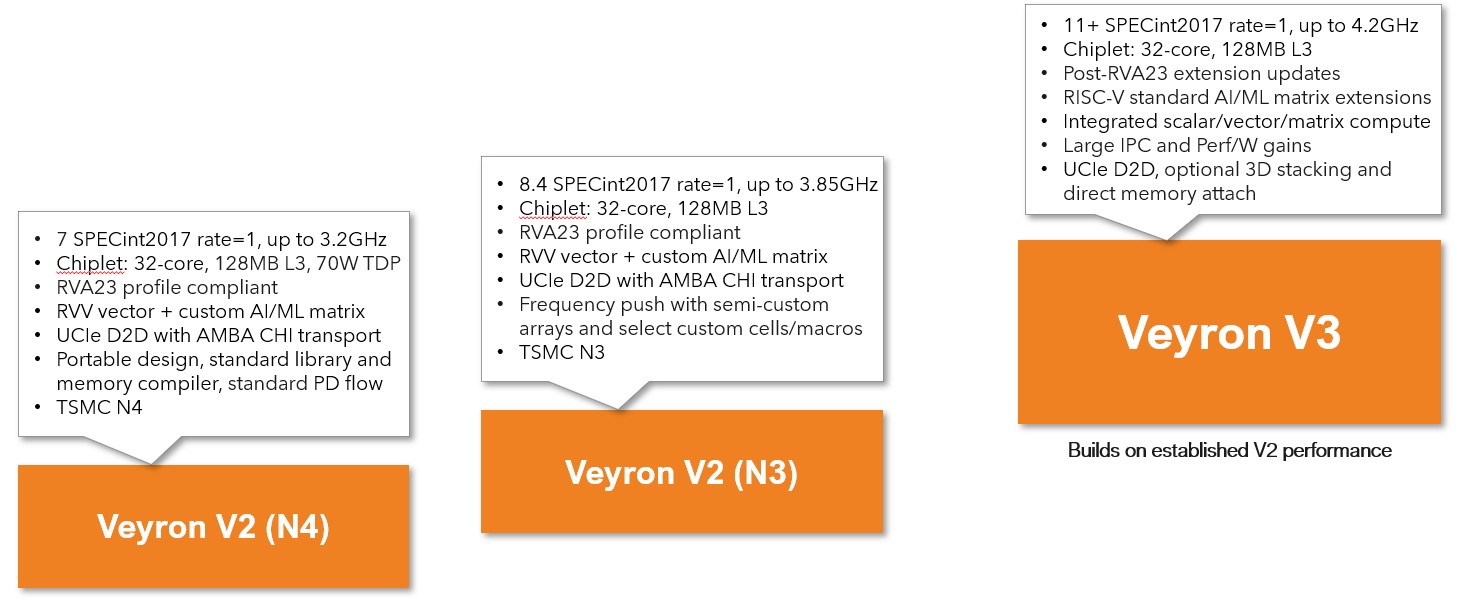

Roadmap

Veyron V2

Ventana’s second-generation high-performance RISC-V CPU delivers a major leap in compute capability, designed for deployment across data center, automotive, and edge applications. Veyron V2 is available as licensable IP for integration into custom SoCs or as a complete silicon platform.

Engineered as a server-class processor, Veyron V2 exceeds the demands of modern, virtualized, cloud-native workloads. Ventana's IP portfolio includes key system-level components such as a RISC-V-compliant IOMMU and supports standard AMBA interfaces, enabling seamless integration with third-party IP and acceleration blocks.

IP available now.

Silicon platforms launching in early 2026.

Modern Architecture, RVA23-Aligned

- Fully compliant with the RISC-V RVA23 profile

- PPA (power, performance, area) comparable to Arm Neoverse V3 / Cortex-X4

- Standard AMBA CHI.E coherent interface for SoC and chiplet integration

- Co-architected with Veyron E2 for seamless vector, AI acceleration, and big-little style heterogeneous compute configurations

Extreme Performance and Power Efficiency

- Optimized for high IPC and 3+ GHz core frequency

- 15-wide out-of-order core: fetch, decode, and execute up to 15 instructions per cycle

- Balanced performance-per-watt architecture optimized to scale from hyperscale to edge environments

- Advanced power gating and DVFS support for fine-grained control

- Up to 32 cores per cluster

- Decoupled front-end and advanced branch prediction

- High-performance 512-bit RVV 1.0 vector unit with INT8 and BF16 support

- Integrated matrix unit delivering up to 0.5 TOPS/GHz/core (INT8)

- Macro-op caching and aggressive prefetching for instruction and data streams
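As a rough illustration of how the per-core matrix figure scales, the sketch below multiplies the quoted 0.5 TOPS/GHz/core (INT8) rate by a clock frequency and a core count. The 3.0 GHz clock and the core counts are example inputs picked from the ranges above, not a specific product configuration. The result is a theoretical peak that assumes every core sustains the matrix unit's full rate at the same frequency; delivered throughput will also depend on workload, memory bandwidth, and power limits.

```python
# Theoretical peak INT8 matrix throughput from the quoted 0.5 TOPS/GHz/core rate.
# The clock and core counts below are example inputs, not a specific product SKU.

TOPS_PER_GHZ_PER_CORE = 0.5  # INT8 matrix-unit rate from the feature list


def peak_int8_tops(freq_ghz: float, cores: int) -> float:
    """Peak INT8 TOPS, assuming every core runs its matrix unit at full rate."""
    if freq_ghz <= 0 or cores <= 0:
        raise ValueError("frequency and core count must be positive")
    return TOPS_PER_GHZ_PER_CORE * freq_ghz * cores


if __name__ == "__main__":
    for cores in (1, 8, 32):
        print(f"{cores:>2} cores @ 3.0 GHz: {peak_int8_tops(3.0, cores):.1f} TOPS")
```

At 3.0 GHz this works out to 1.5 TOPS per core and 48 TOPS for a fully populated 32-core cluster.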

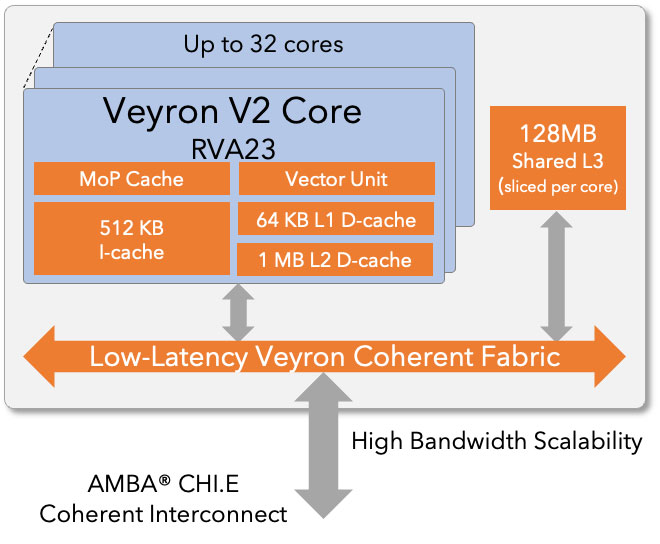

Advanced Cache & Cluster Architecture

- 1.5 MB private L2 cache per core

- Shared L3 cache configurable from 1–4 MB per core (up to 128 MB per cluster)

- Low-latency coherent cluster fabric

- High-bandwidth shared resources optimized for multithreaded workloads
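The cluster-level cache figures follow from the per-core numbers above. The sketch below totals raw L2 and L3 capacity for a cluster, using the 1.5 MB private L2, the 1 to 4 MB per-core L3 range, and the 32-core cluster limit from this page. It simply sums capacities; how much of that is effective capacity depends on the L2/L3 inclusion policy, which the page does not state, so treat the totals as raw SRAM capacity only.

```python
# Raw cache capacity of one cluster, from the figures above:
# 1.5 MB private L2 per core and a shared L3 configurable from 1 to 4 MB per core.
# Totals are simple sums; effective capacity also depends on the L2/L3 inclusion
# policy, which is not specified here.

L2_MB_PER_CORE = 1.5
L3_MB_PER_CORE_MIN = 1.0
L3_MB_PER_CORE_MAX = 4.0
MAX_CORES_PER_CLUSTER = 32


def cluster_cache_mb(cores: int, l3_mb_per_core: float) -> dict:
    """Return total L2, total L3, and combined capacity (MB) for one cluster."""
    if not 1 <= cores <= MAX_CORES_PER_CLUSTER:
        raise ValueError(f"cores must be between 1 and {MAX_CORES_PER_CLUSTER}")
    if not L3_MB_PER_CORE_MIN <= l3_mb_per_core <= L3_MB_PER_CORE_MAX:
        raise ValueError("L3 per core must be between 1 and 4 MB")
    l2_total = L2_MB_PER_CORE * cores
    l3_total = l3_mb_per_core * cores
    return {"l2_mb": l2_total, "l3_mb": l3_total, "total_mb": l2_total + l3_total}


if __name__ == "__main__":
    for l3 in (L3_MB_PER_CORE_MIN, L3_MB_PER_CORE_MAX):
        print(f"32 cores, {l3:g} MB L3/core:", cluster_cache_mb(32, l3))
```

With the maximum 4 MB slice, the shared L3 reaches the 128 MB per-cluster ceiling stated above, alongside 48 MB of private L2 spread across the 32 cores.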

Server-Class Reliability, Virtualization, and Optimization

- Full architectural virtualization support for cloud-native workloads

- Comprehensive RAS (reliability, availability, and serviceability) features:

  - ECC on all caches and functional RAMs

  - End-to-end data poisoning protection

  - Background error scrubbing and logging

- Built-in side-channel attack mitigation

- Comprehensive performance profiling and tuning support

Flexible IP Integration for Custom SoCs

- Clean, portable RTL – no custom macros or proprietary RAMs

- Modular multi-core cluster design for high-core-count scaling

- Integration-ready with standardized IP interfaces

Chiplet Integration & Packaging

- Standardized chiplet interfaces:

  - Ventana D2D multi-protocol controller

  - UCIe PHY for chiplet-based system integration

- Compatible with cost-effective organic packaging for volume deployment

- Configurable TDP for deployment across power-performance targets

- Turbo profile management with real-time power behavior control

- Digital power models at both core and cluster level for dynamic scaling

Veyron V3

Key Upgrades Over Veyron V2

- SPECint2017 score of 11+ (rate, 1 copy) at up to 4.2 GHz

- Enhanced RISC-V standard matrix extension support alongside RVV 1.0 and scalar compute

- 24 TFLOPS per core of FP8 matrix compute for AI/ML acceleration, or up to 4.5 PFLOPS in a 192-core chiplet-based system-in-package (SiP)

- Significantly higher IPC and performance-per-watt, driven by new microarchitecture innovations

Next-Level Microarchitecture: Wide Efficiency via Macro-Ops

- Macro-op optimized design:

  - Internal macro-ops encode 1–5 RISC-V instructions

  - Advanced fusion engine dynamically creates optimized macro-ops from hot instruction sequences

- Acts much wider than it looks — macro-ops magnify effective decode width, backend capacity, and parallelism without physically increasing resources

- Achieves high performance and power efficiency without brute-force frontend or backend scaling

- Hardware techniques that raise instruction-level parallelism (ILP) and shorten execution paths, transparently to software
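To make the macro-op idea concrete, the sketch below models a fusion pass that greedily chains dependent instructions into macro-ops of at most five instructions, then reports how many RISC-V instructions each macro-op carries on average. The fusion table, the `Insn` type, and the sample instruction stream are hypothetical: the pairs shown (such as `lui`+`addi` or `auipc`+`jalr`) are commonly cited RISC-V fusion idioms, not Ventana's documented rules. The model also ignores register-liveness and encoding-width checks that real hardware would need.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

MAX_MACRO_OP_LEN = 5  # a macro-op encodes 1 to 5 RISC-V instructions

# Hypothetical fusion table: (producer, consumer) opcode pairs that may merge when
# the consumer reads the producer's destination register. These are commonly cited
# RISC-V fusion idioms used for illustration, not Ventana's actual rules.
FUSIBLE_PAIRS = {
    ("lui", "addi"),    # materialize a 32-bit constant
    ("auipc", "addi"),  # PC-relative address
    ("auipc", "jalr"),  # far call or jump
    ("slli", "add"),    # scaled index
    ("add", "ld"),      # indexed load
}


@dataclass(frozen=True)
class Insn:
    op: str
    rd: Optional[str]
    srcs: Tuple[str, ...] = ()


def can_fuse(prev: Insn, nxt: Insn) -> bool:
    """True if the pair is in the table and nxt consumes prev's result.

    Simplification: ignores whether the intermediate result is still live.
    """
    return (
        (prev.op, nxt.op) in FUSIBLE_PAIRS
        and prev.rd is not None
        and prev.rd in nxt.srcs
    )


def form_macro_ops(stream: List[Insn]) -> List[List[Insn]]:
    """Greedily chain fusible, dependent instructions into groups of at most 5."""
    groups: List[List[Insn]] = []
    i = 0
    while i < len(stream):
        group = [stream[i]]
        i += 1
        while (
            i < len(stream)
            and len(group) < MAX_MACRO_OP_LEN
            and can_fuse(group[-1], stream[i])
        ):
            group.append(stream[i])
            i += 1
        groups.append(group)
    return groups


def instructions_per_macro_op(groups: List[List[Insn]]) -> float:
    """Average number of RISC-V instructions carried by each macro-op."""
    if not groups:
        return 0.0
    return sum(len(g) for g in groups) / len(groups)


# Hypothetical hot sequence: constant build, indexed load, far call, plain add.
EXAMPLE_STREAM = [
    Insn("lui", "a0"),
    Insn("addi", "a0", ("a0",)),
    Insn("slli", "t0", ("a1",)),
    Insn("add", "t0", ("t0", "a2")),
    Insn("ld", "t1", ("t0",)),
    Insn("auipc", "ra"),
    Insn("jalr", "ra", ("ra",)),
    Insn("add", "a3", ("a4", "a5")),
]

if __name__ == "__main__":
    groups = form_macro_ops(EXAMPLE_STREAM)
    for g in groups:
        print(" + ".join(insn.op for insn in g))
    print(f"{len(EXAMPLE_STREAM)} instructions -> {len(groups)} macro-ops "
          f"({instructions_per_macro_op(groups):.2f} per macro-op)")
```

On this stream, eight instructions collapse into four macro-ops (`lui + addi`, `slli + add + ld`, `auipc + jalr`, and a lone `add`). A front end that handles a fixed number of macro-ops per cycle would therefore deliver twice that many RISC-V instructions per cycle here, which is the sense in which fusion magnifies effective decode width. Real gains depend on how often fusible sequences appear in hot code.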

Superscalar Execution and Predictive Throughput Architecture

- 16 execution pipelines and schedulers:

  - 5 integer, 3 load/store, 3 scalar FP, 5 vector/matrix

- 200+ scheduler entries plus large resource queues and buffers

- Sophisticated branch and memory prediction engines:

  - Multiple primary and secondary branch predictors

  - Load value predictor, memory dependency and bypass prediction

Cluster Architecture and Coherency Fabric

- 32-core cluster design with 128 MB L3 cache

- Upgraded high-bandwidth, low-latency coherent fabric enables efficient cluster scaling

Compute Density and AI Performance

- Unified scalar/vector/matrix execution model

- Exceptional AI compute density with FP8 matrix engine

- Optimized for inference acceleration at scale

Physical Design and Packaging

- Frequency-optimized physical implementation:

  - Semi-custom place-and-route for timing-critical logic

  - Custom standard cells and SRAM macros on advanced process node

- Support for UCIe-based die-to-die (D2D) interconnect

- Optional 3D stacking and direct memory attach
